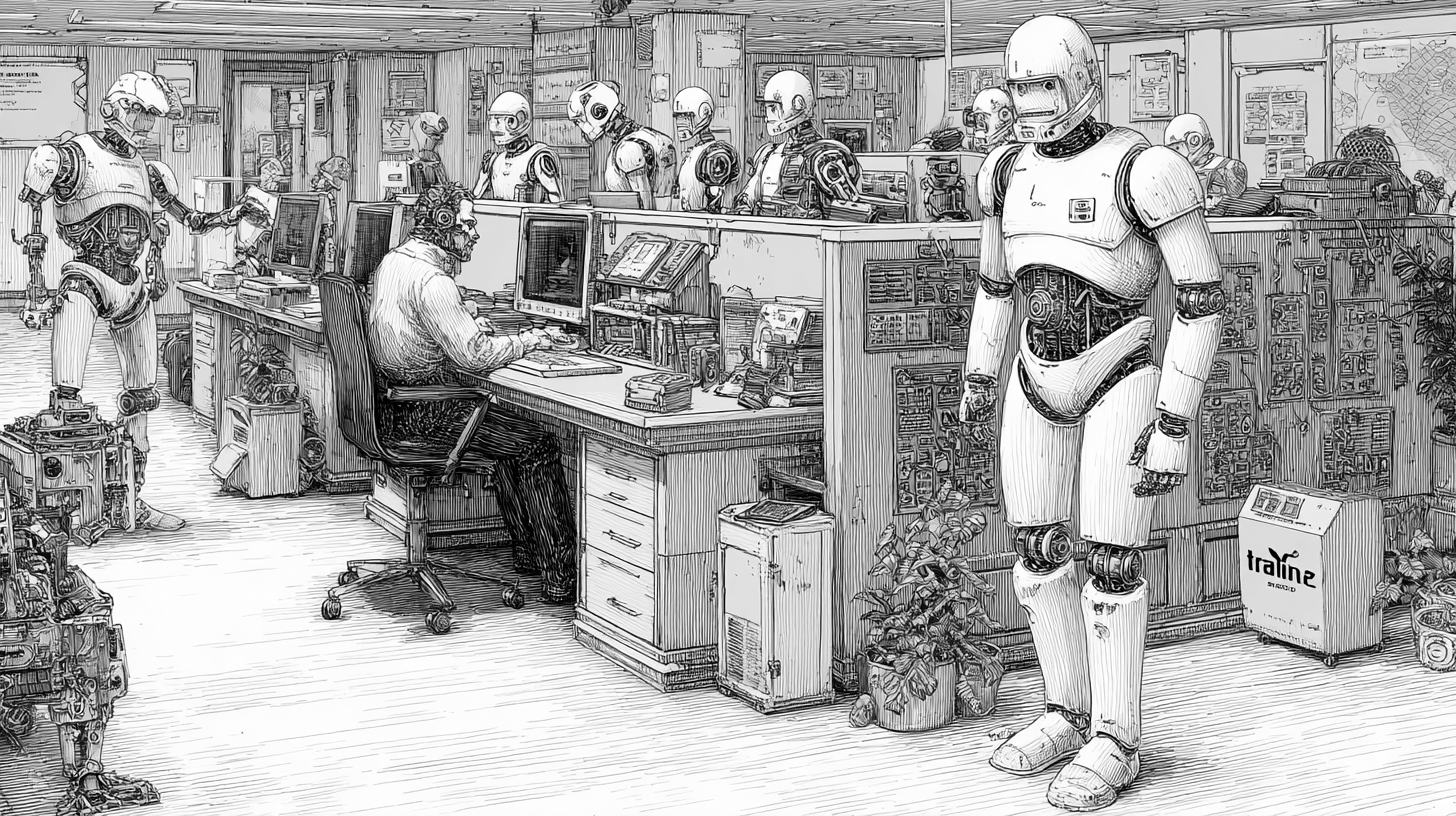

Most teams adopting AI agents make the same mistake: they treat them like interns.

Give the agent a task. Wait for the output. Review it. Give feedback. Repeat. It works, sort of, but it misses the point entirely. You've just added a slow employee who needs constant supervision.

The intern mental model is broken

When you think "intern", you think in terms of delegation. You have a task. You hand it off. You quality-check the result. The bottleneck is still you -- your time, your attention, your ability to define and review tasks.

That model scales linearly at best. More agents means more supervision, so returns diminish fast.

The teams getting 10x output aren't managing agents. They're designing systems where agents run loops autonomously.

Think in loops, not tasks

The shift is from "do this thing" to "run this process continuously." Instead of assigning tasks, you're designing feedback loops that agents execute without you in the critical path.

Example: market research at [r]think

Intern model: "Research competitors in the Australian insurtech space and write a summary."

Loop model: When we built deetech, we did not assign an agent a one-off research task. We set up a loop that continuously monitors insurer pricing changes, new claims technology patents, regulatory filings, and competitor job postings. It flags anomalies weekly. We review a dashboard, not a document -- and the dashboard gets smarter every cycle because the agent learns what we actually act on.

Example: content pipeline

Intern model: "Write a blog post about venture studios."

Loop model: An agent scans internal notes, meeting transcripts, and customer conversations for recurring themes. It drafts outlines when it detects a pattern. You shape the narrative; the agent handles the assembly. The output is not "content" -- it is a continuously updated map of what your team is actually thinking about.

Design principles for agent systems

We've learned a few things building agent workflows across our ventures:

- Define the loop, not the task. What input triggers the agent? What output does it produce? Where does that output feed next?

- Minimise human-in-the-loop steps. Every time a human has to review or approve, you've added latency and created a bottleneck. Reserve human judgment for high-stakes decisions.

- Build checkpoints, not checkups. Instead of reviewing every output, set thresholds. The agent runs until something exceeds a threshold, and only then does it escalate to a human.

- Stack loops. The output of one agent loop becomes the input of another. Discovery feeds into build. Build feeds into validation. This is where compounding happens.
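
To make these principles concrete, here is a minimal Python sketch of a loop with a checkpoint, plus two loops stacked together. Treat it as an illustration under stated assumptions, not a description of our production systems: the `Loop` class, the `run_stack` helper, the lambda stand-ins for real agent calls, and the 0.5 threshold are all hypothetical names and values chosen for the example.

```python
from dataclasses import dataclass, field
from typing import Callable, Iterable, List


@dataclass
class Loop:
    """One agent loop: an input trigger, a step, and a checkpoint."""
    name: str
    step: Callable[[str], str]      # hypothetical stand-in for an agent call
    score: Callable[[str], float]   # hypothetical anomaly/risk score in [0, 1]
    threshold: float                # checkpoint: escalate only above this
    escalated: List[str] = field(default_factory=list)

    def run(self, inputs: Iterable[str]) -> List[str]:
        """Process every input; pass routine outputs on, escalate the rest."""
        passed = []
        for item in inputs:
            output = self.step(item)
            if self.score(output) > self.threshold:
                self.escalated.append(output)  # a human reviews only these
            else:
                passed.append(output)          # feeds the next loop
        return passed


def run_stack(loops: List[Loop], inputs: Iterable[str]) -> List[str]:
    """Stack loops: each loop's passed outputs become the next loop's inputs."""
    items = list(inputs)
    for loop in loops:
        items = loop.run(items)
    return items


# Toy example: discovery feeds build. Anything mentioning pricing is
# treated as high-stakes and escalated instead of flowing downstream.
discovery = Loop(
    name="discovery",
    step=lambda s: f"finding:{s}",
    score=lambda out: 0.9 if "pricing" in out else 0.1,
    threshold=0.5,
)
build = Loop(
    name="build",
    step=lambda s: f"draft:{s}",
    score=lambda out: 0.0,
    threshold=0.5,
)

result = run_stack([discovery, build], ["pricing change", "job posting"])
print(result)               # routine work that flowed through both loops
print(discovery.escalated)  # the only items a human needs to look at
```

The design choice worth noticing is that the human never appears inside `run`. Review happens only on the `escalated` list, so adding a third or fourth loop to the stack does not add a review step unless something crosses a threshold.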

The operator's role changes

In an agent-first team, the operator's job shifts from "manager of tasks" to "architect of systems." You're not reviewing documents -- you're designing workflows, setting thresholds, and making the hard calls that agents can't.

This is a fundamental shift. It's less about how fast you can work and more about how well you can design the machine that does the work.

Start small, think in systems

You don't need to rebuild everything overnight. Pick one workflow that's currently manual and repetitive. Design it as a loop. Let an agent run it for a week. Measure the output.

Then stack another loop on top.

That's how you get to 10x. Not by hiring 10 interns, but by building one machine that compounds.